Chapter 1 - Understanding Audio

The Basics: What Makes Audio Sound

Well, maybe not excitations, but it's certainly all about vibrations. You can probably remember this from science lessons. Something vibrates, which makes the air vibrate; the air vibrates the tiny, sensitive bones in your ear, and your brain registers this sensation as sound. That's it in a nutshell. Things that vibrate quickly are heard as high-pitched, things that vibrate slowly are low-pitched, and things that make big vibrations are loud.

These vibrations can be represented graphically by a waveform:

In this diagram, the 'size' of the vibrations (the amplitude) is plotted on the vertical (y) axis and the time at which it was measured is plotted on the horizontal (x) axis. If you heard this sound, it would be a clear, solid note like the one made by a tuning fork. A higher-pitched note would have more cycles in the same amount of time, i.e. more peaks and troughs. A quieter note would have smaller peaks and troughs.

Sound is digitised by essentially replicating the way the ear works. Instead of sensitive bones, a microphone has a sensitive material that vibrates just as the bones in your ear do. These vibrations then (by a variety of methods) create an electrical current, which translates the amplitude of the sound into a voltage. What you get is exactly the same diagram as above, except that the y axis is now in volts. This voltage can then be measured and converted into the binary numbers that computers use. This is digitisation.

When sound is digitised, the waveform is captured by measuring the voltage (the y axis) thousands of times a second and storing each reading as a binary number.

When you record audio on your PC, or save or listen to audio files, you may notice that the audio has two properties: bits and sample rate. The most common combination is 16-bit 44.1 kHz audio, as found on CDs.
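To make the diagram a bit more concrete, here is a minimal Python sketch of what a digitiser does with a pure tone: it takes a reading many times a second and keeps the numbers. It's only an illustration under simple assumptions (a perfect sine wave, amplitudes running from -1.0 to 1.0, and function names I've made up), but it shows that the higher note completes more cycles in the same second and the quieter note has smaller peaks.

```python
import math

def sample_sine(frequency_hz, amplitude, sample_rate_hz, duration_s):
    """Take sample_rate_hz readings per second of a pure tone (like a tuning fork)."""
    n_samples = int(sample_rate_hz * duration_s)
    return [amplitude * math.sin(2 * math.pi * frequency_hz * n / sample_rate_hz)
            for n in range(n_samples)]

def count_cycles(samples):
    """Count upward zero crossings, roughly one per complete cycle of the wave."""
    return sum(1 for a, b in zip(samples, samples[1:]) if a < 0 <= b)

low = sample_sine(220, 1.0, 44_100, 1.0)     # a lower note
high = sample_sine(440, 1.0, 44_100, 1.0)    # an octave higher: twice the cycles
quiet = sample_sine(440, 0.25, 44_100, 1.0)  # same pitch, smaller peaks and troughs

print(count_cycles(low), count_cycles(high))  # roughly 220 and 440
print(max(high), max(quiet))                  # roughly 1.0 and 0.25
```

Counting zero crossings only works this neatly for a single pure tone; real sounds are far messier, as the rest of this chapter shows.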
But what do these numbers actually mean?

Bits (binary digits): the easiest way to describe bits in audio is to compare them to video. As you may know, you can set your computer's display to 8-bit, 16-bit, 32-bit colour and so on. In video terms, the number of bits determines how many different colours can be displayed. Audio is very similar: the number of bits determines how many different voltages (amplitudes) can be recorded for each sample. Each extra bit doubles the number of levels, so just as 8-bit video only has 256 (2^8) colours, 8-bit audio only has 256 different amplitudes it can store.
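Here's a small Python sketch of that arithmetic. It assumes samples are held as numbers between -1.0 and 1.0 and mapped onto signed integer levels, which is one common convention rather than the only one; `levels` and `quantise` are hypothetical helper names. Rounding to the nearest level shows why more bits means a smaller error, and clamping out-of-range values is exactly the clipping described later in this chapter.

```python
def levels(bits):
    """Number of distinct amplitudes an n-bit sample can store."""
    return 2 ** bits

def quantise(value, bits):
    """Round a value in the range -1.0..1.0 to the nearest n-bit level.

    Anything outside the range is clamped to the nearest end (this is clipping).
    """
    top = 2 ** (bits - 1) - 1      # e.g. 127 for 8-bit, 32767 for 16-bit
    bottom = -(2 ** (bits - 1))    # e.g. -128 for 8-bit, -32768 for 16-bit
    code = round(value * top)
    return max(bottom, min(top, code))

print(levels(8), levels(16))  # 256 65536

x = 0.3
for bits in (8, 16):
    code = quantise(x, bits)
    error = abs(x - code / (2 ** (bits - 1) - 1))  # how far the stored level is from x
    print(bits, code, error)

print(quantise(1.7, 16))  # 32767: too loud, so the peak is clipped
```

The 16-bit rounding error comes out a couple of hundred times smaller than the 8-bit one, simply because the same range is divided into many more steps.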


Sample rate is even simpler: it's the number of times every second that the audio is sampled, i.e. measured and given a binary number. CDs are sampled at 44.1 kHz, or 44,100 times a second. That's quite a lot, really, but still nothing compared to video capture ^_^;;
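As a quick worked example, here's the arithmetic in Python. The one thing not stated above is that CD audio is stereo (two channels); the constant and function names are my own.

```python
CD_SAMPLE_RATE = 44_100  # samples per second
CD_BITS = 16             # bits per sample
CD_CHANNELS = 2          # CD audio is stereo

def samples_for(duration_s, sample_rate=CD_SAMPLE_RATE):
    """How many samples one channel needs for a clip of this length."""
    return round(duration_s * sample_rate)

def bits_per_second(sample_rate=CD_SAMPLE_RATE, bits=CD_BITS, channels=CD_CHANNELS):
    """Raw (uncompressed) data rate of PCM audio."""
    return sample_rate * bits * channels

print(1 / CD_SAMPLE_RATE)  # gap between samples: about 22.7 microseconds
print(samples_for(180))    # 7938000 samples per channel for a 3-minute song
print(bits_per_second())   # 1411200 bits every second for CD audio
```

That 1,411,200 bits per second works out at roughly 10 MB per minute, which is why uncompressed audio files get big so quickly.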

Right, so now you know what 16-bit 44.1 kHz audio means: 65,536 possible levels, measured 44,100 times a second. This way of digitising audio is known as Pulse Code Modulation (PCM), and you will notice that audio compression settings almost always include an option for Uncompressed Wav PCM. This is it.
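Here's a minimal Python sketch of producing exactly this kind of file with the standard-library `wave` module. It assumes mono audio held as floats between -1.0 and 1.0, and it applies the peak normalisation described a little further down before converting each sample to a 16-bit integer. The function names and the output file name are made up for illustration.

```python
import math
import os
import struct
import tempfile
import wave

SAMPLE_RATE = 44_100              # samples per second
BITS = 16                         # bits per sample
FULL_SCALE = 2 ** (BITS - 1) - 1  # 32767, the largest positive 16-bit value

def normalise(samples, target=0.95):
    """Peak normalisation: find the highest amplitude, then scale everything
    so that peak lands at `target` of full scale (0.95 = 95%)."""
    peak = max(abs(s) for s in samples)
    if peak == 0:
        return list(samples)      # pure silence: nothing to amplify
    gain = target / peak
    return [s * gain for s in samples]

def write_pcm_wav(path, samples, sample_rate=SAMPLE_RATE):
    """Write mono 16-bit PCM; each float in -1.0..1.0 becomes one 16-bit integer."""
    ints = [max(-FULL_SCALE - 1, min(FULL_SCALE, round(s * FULL_SCALE))) for s in samples]
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)            # mono
        wav.setsampwidth(BITS // 8)    # 2 bytes per sample
        wav.setframerate(sample_rate)  # 44,100 samples per second
        wav.writeframes(struct.pack(f"<{len(ints)}h", *ints))  # little-endian 16-bit

# A quiet one-second 440 Hz tone, normalised and saved as uncompressed PCM.
tone = [0.1 * math.sin(2 * math.pi * 440 * n / SAMPLE_RATE) for n in range(SAMPLE_RATE)]
loud = normalise(tone)
path = os.path.join(tempfile.gettempdir(), "tone.wav")  # hypothetical output file
write_pcm_wav(path, loud)
```

The file contains nothing clever: a short header saying "mono, 16-bit, 44,100 Hz" followed by one 16-bit number per sample.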

It stands to reason that assigning a digital number to an analogue signal can have its problems. Due to their only being a specific amount of 'steps' (quantization levels) that amplitudes can be assigned to there is a considerable amount of rounding errors. Obviously choosing 16bit sampling over 8bit sampling will make these rounding errors less significant as there are more possible levels, as explained earlier. However, there is another curse, which occurs when trying to give a value to a sample that is outside the digital range. A common situation for this to happen is when trying to sample an analogue signal (with your pc etc.) that is too loud and all you get is garbage noise - which is in this case an example of "clipping". The peaks and troughs of the incoming sound are beyond the range of the sampling system to the tops and bottoms of the peaks are 'clipped' which causes the garbage noise. Problems can also occur in low bit sampling with audio that is too quiet. These types of garbage noise should be avoided at all costs. If you want to increase the volume (gain) without creating garbage data, then see the section on sourcing your audio... this process is called Normalisation and I might as well tell you what it does here. In terms of audio, this is a two-stage process which is intended to maximise the volume of your audio sample. The normalisation process does the following: 1) Finds the highest amplitude of any wave in the audio 2) Amplifies the entire audio wave so that this amplitude does not exceed the maximum that can be stored in binary - i.e. without clipping. It is possible to normalise to 95% but essentially the principle is to find the maximum gain without clipping any of your peaks.... of course, if they're clipped already because the analogue input was too loud then there's nothing you can do. When you listen to a song, there is generally much more than one instrument

being played. Not only is there more than one sound but this sound is

also made up of harmonics and other audio wonders that make the instruments

and voices sound the way they do. When this is combined together in

a waveform it is alters the waveform into something quite indistinguishable

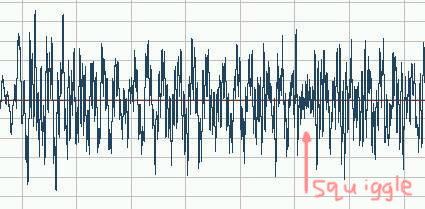

from its parts. In fact, it's a bit of a mess: |


This is a small section from a version of "Fly Me to the Moon" used in Evangelion. In this section you can hear a bass and vocals (though it is a very short section). The waveform doesn't really show you much. There are a few recognisable patterns, but because the waveforms are combined, it is difficult to separate the double bass from the vocals.
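A heavily simplified Python sketch of why this happens: mixing sounds together just adds their waves sample by sample, and only the sum is stored. Real instruments have harmonics and are nothing like pure tones, so the two sine waves below are stand-ins I've chosen purely for illustration, and the names are hypothetical.

```python
import math

RATE = 44_100  # samples per second

def tone(freq_hz, amplitude, n_samples, rate=RATE):
    """A pure sampled tone."""
    return [amplitude * math.sin(2 * math.pi * freq_hz * n / rate) for n in range(n_samples)]

n = 2_000                    # about 45 ms of audio
bass = tone(55, 0.5, n)      # low A: a stand-in for the double bass
voice = tone(440, 0.3, n)    # higher A: a stand-in for the vocal line
mix = [b + v for b, v in zip(bass, voice)]  # mixing = adding the waves sample by sample

# The file only stores `mix`. A single mixed value such as 0.3 could be
# 0.2 + 0.1, 0.1 + 0.2, 0.5 - 0.2 and so on, which is why the parts
# can't simply be read back off the waveform.
for i in range(0, 400, 100):
    print(round(bass[i], 3), round(voice[i], 3), round(mix[i], 3))
```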


This is a longer section of the same song; in fact, it's the "to the" bit of "fly me to the moon". It still doesn't show you much, but if you look carefully there is a short, quiet, tight squiggle ¾ of the way along. That's a "tap" noise that's part of the jazz drumming. It stands out because it's much higher-pitched, i.e. it has a much higher frequency than the bass or the vocals, so its cycles are packed much more tightly together. In a more complex piece of music, like a rock song, this would be much more difficult to spot.
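You can pick out a high-frequency squiggle like this with a very crude trick: high-pitched waves change a lot from one sample to the next, while low ones change only a little. The Python sketch below applies that idea to a synthetic stand-in (a loud 110 Hz tone with a quiet 3 kHz burst added), not to the real song; the window size, frequencies and function names are all my own assumptions.

```python
import math

RATE = 44_100
WINDOW = 441  # 10 ms per window (an arbitrary choice for this sketch)

def roughness(samples):
    """Average size of the sample-to-sample change.

    Taking differences is a crude high-pass filter: slow, low-pitched waves
    change little between samples, fast, high-pitched ones change a lot.
    """
    diffs = [abs(b - a) for a, b in zip(samples, samples[1:])]
    return sum(diffs) / len(diffs)

def roughness_per_window(samples, window=WINDOW):
    return [roughness(samples[i:i + window])
            for i in range(0, len(samples) - window + 1, window)]

# Synthetic stand-in: a loud 110 Hz tone for half a second, plus a quiet
# 10 ms burst at 3 kHz about three quarters of the way along.
n = RATE // 2
signal = [0.6 * math.sin(2 * math.pi * 110 * i / RATE) for i in range(n)]
tap_start = (3 * n // 4) // WINDOW * WINDOW  # snap the burst to a window boundary
for i in range(tap_start, tap_start + WINDOW):
    signal[i] += 0.1 * math.sin(2 * math.pi * 3000 * i / RATE)

scores = roughness_per_window(signal)
busiest = scores.index(max(scores))
print(busiest, len(scores))  # 37 50: the burst sits about three quarters of the way along
```

Even though the burst is six times quieter than the low tone, it dominates the sample-to-sample changes, which is the same reason it shows up as a tight squiggle in the waveform.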


That's the basics of how sound is digitised and what graphical representations mean. We'll have a further look later when we see how graphical representations can help you with your video.

AbsoluteDestiny - June 2002